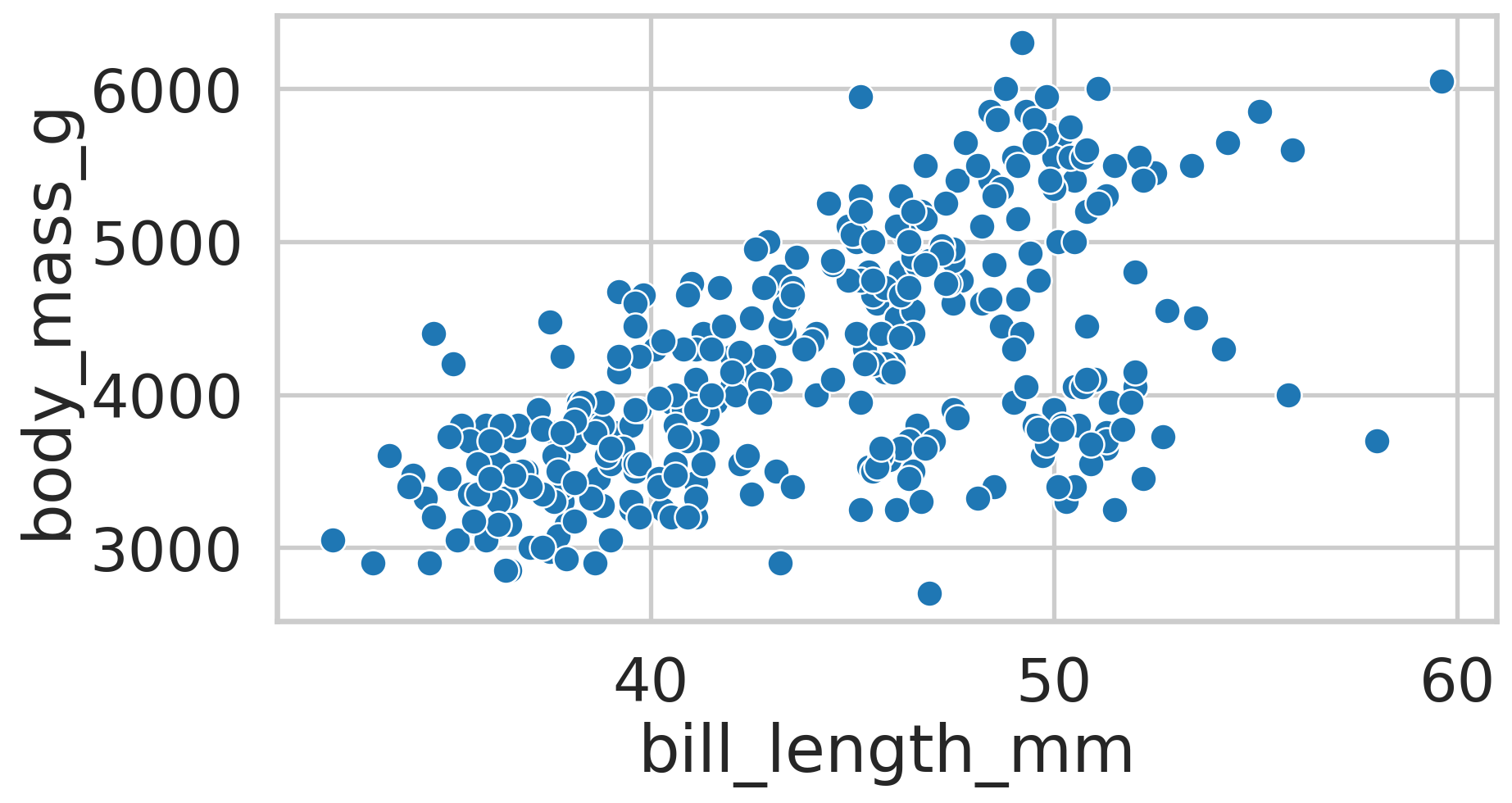

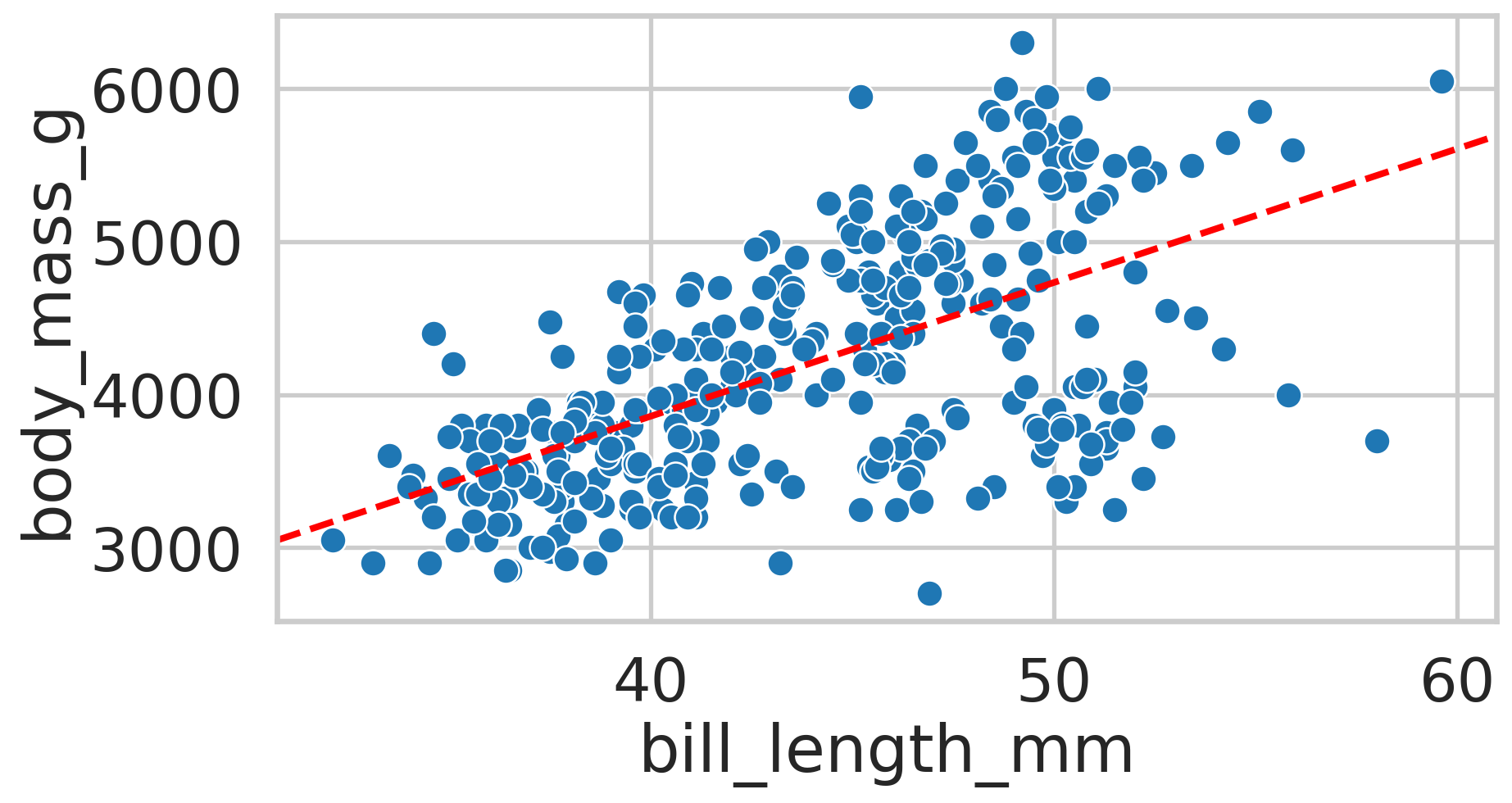

| | species | island | bill_length_mm | bill_depth_mm | flipper_length_mm | body_mass_g | sex | year |
|---|---|---|---|---|---|---|---|---|
| 0 | Adelie | Torgersen | 39.10 | 18.70 | 181.00 | 3,750.00 | male | 2007 |
| 1 | Adelie | Torgersen | 39.50 | 17.40 | 186.00 | 3,800.00 | female | 2007 |
| 2 | Adelie | Torgersen | 40.30 | 18.00 | 195.00 | 3,250.00 | female | 2007 |
| 3 | Adelie | Torgersen | NaN | NaN | NaN | NaN | NaN | 2007 |
| 4 | Adelie | Torgersen | 36.70 | 19.30 | 193.00 | 3,450.00 | female | 2007 |

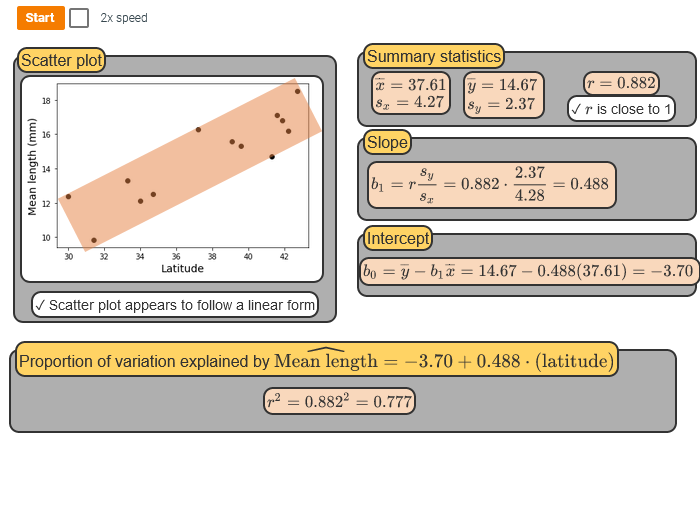

Main Equation

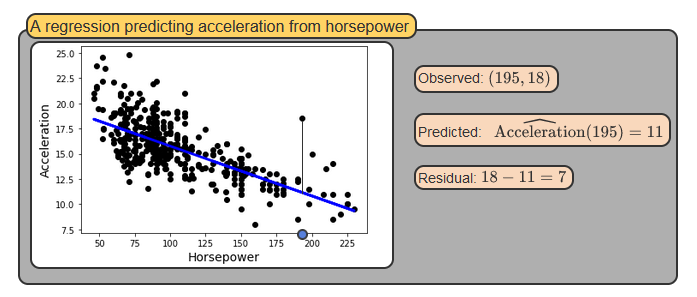

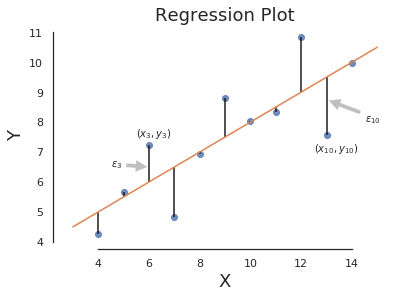

- Set of data: \(\{(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)\}\)

- \(x\): input, \(y\): output

- Model: \(y = \beta_0 + \beta_1 x + \epsilon\), with fitted prediction \(\hat{y} = \beta_0 + \beta_1 x\)

- \(\hat{y}\) = prediction

- \(\beta_0\) = y-intercept

- \(\beta_1\) = slope

- \(\epsilon\) = error
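To make the symbols concrete, here is a minimal sketch of estimating \(\beta_0\) and \(\beta_1\) from data. It assumes ordinary least squares as the fitting method, which these notes do not name. The choice of `flipper_length_mm` as \(x\) and `body_mass_g` as \(y\) is also an assumption made for illustration, and the helper names `fit_line` and `predict` are hypothetical. Four points are far too few for a meaningful fit, so the numbers only show the mechanics.

```python
def fit_line(xs, ys):
    """Return (beta_0, beta_1) that minimize the sum of squared errors."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Spread of x around its mean, and co-variation of x with y.
    s_xx = sum((x - x_mean) ** 2 for x in xs)
    s_xy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    if s_xx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    beta_1 = s_xy / s_xx                # slope
    beta_0 = y_mean - beta_1 * x_mean   # y-intercept
    return beta_0, beta_1


def predict(x, beta_0, beta_1):
    """Prediction y_hat = beta_0 + beta_1 * x (no error term)."""
    return beta_0 + beta_1 * x


# The four complete rows of the table above; row 3 is dropped because it is NaN.
flipper_length_mm = [181.0, 186.0, 195.0, 193.0]   # x: input (assumed choice)
body_mass_g = [3750.0, 3800.0, 3250.0, 3450.0]     # y: output (assumed choice)

beta_0, beta_1 = fit_line(flipper_length_mm, body_mass_g)

# The error for each point is the gap between the observed y and the prediction.
residuals = [
    y - predict(x, beta_0, beta_1)
    for x, y in zip(flipper_length_mm, body_mass_g)
]

print(f"beta_0 (y-intercept): {beta_0:.2f}")
print(f"beta_1 (slope):       {beta_1:.2f}")
print("residuals:", [round(r, 2) for r in residuals])
```

The residuals play the role of \(\epsilon\) for the fitted line: each one is \(y_i - \hat{y}_i\), and under least squares with an intercept they sum to zero up to floating-point rounding.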